Warning

Please note that the documentation you are currently viewing is for an older version of our technology. While it is still functional, we recommend upgrading to our latest and more efficient option to take advantage of all the improvements we’ve made.

Integrate AIMMS with Models Built in Languages Like Python or R

Decision support applications increasingly combine optimization with machine learning. For example, a forecasting model can predict expected demand, which then serves as an input to a MIP model. Python and R are two of the most popular languages in the data science community for developing algorithms such as predictive models and clustering models. AIMMS is optimized for developing apps based on MIP models, and in this article we show how to extend your AIMMS apps with machine learning models built in these other languages. This lets you use data science libraries such as scikit-learn, NumPy, and the Tidyverse R packages from AIMMS as well.

Overview

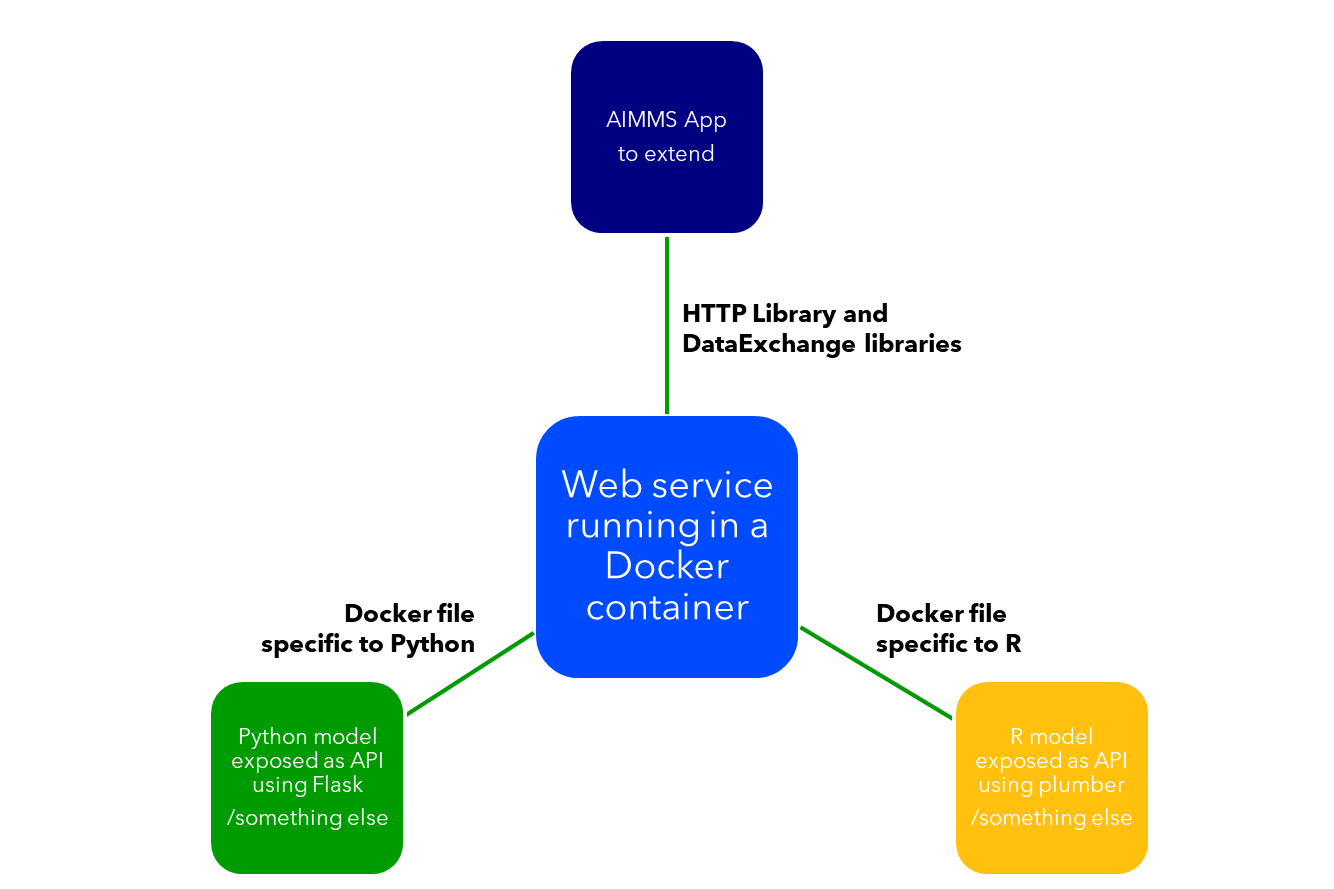

The HTTP Library section contains examples, such as Call Google Maps API, that show how to call web services from AIMMS. We will use the same principles to extend an AIMMS app with models built in Python or R by exposing those models as web services.

We will publish a series of how-to articles explaining how AIMMS apps can be extended with models built in Python or R. While our examples are limited to these two languages for now, we believe the same principles apply to most other languages used by the data science community (such as Julia). Many libraries and packages are available in both Python and R for deploying models as web services. The ones our team chose are arbitrary.

Note

These articles are not intended as a tutorial on, or an endorsement of, any particular package or software tool. We show just one of many ways of creating web services in Python/R. Choose an architecture that best fits your needs and the skill set available in your team.

The diagram at the start of this section shows the architecture we will use in our examples.

The steps in this process are:

1. Develop the model in Python/R as usual.

2. Expose the model as a web service (for example, a REST API) that takes input data from the client and returns the model results. Flask in Python and Plumber in R are example libraries that could be used for this purpose.

3. Use the DataExchange library to construct AIMMS procedures that read and write JSON files (or CSV/XML) compatible with the model built in Step 1.

4. Use the HTTP library functions to call the API built in Step 2 from AIMMS. This step uses the AIMMS procedures from Step 3 to prepare the data for the API and to retrieve the results back into AIMMS.

5. (Optional) If there are numerous use cases, it could be helpful to maintain a library of functions in Python/R/AIMMS to facilitate the data transformations.

By following the above steps, you can have your Python/R model available as a local web service running on your computer.
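The first two steps (develop a model, then expose it as a web service) can be sketched as below. This is a minimal illustration under stated assumptions, not the setup used in the linked examples: it uses Python's standard-library `http.server` instead of Flask to stay dependency-free, and the toy moving-average "model", the `/forecast` endpoint, and the `history` and `forecast` JSON fields are all hypothetical names chosen for this sketch.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def forecast_demand(history, window=3):
    """Toy stand-in for a real model: mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def handle_forecast(raw_body):
    """Turn a JSON request body into (HTTP status, JSON response text)."""
    try:
        payload = json.loads(raw_body)
        values = payload["history"]
        if not isinstance(values, list) or not values:
            raise ValueError("history must be a non-empty list")
        history = [float(v) for v in values]
    except (KeyError, TypeError, ValueError):
        # Covers malformed JSON, a missing field, and non-numeric entries.
        return 400, json.dumps({"error": "expected a non-empty 'history' list"})
    return 200, json.dumps({"forecast": forecast_demand(history)})


class ModelHandler(BaseHTTPRequestHandler):
    """Serves POST /forecast (hypothetical endpoint name for this sketch)."""

    def do_POST(self):
        if self.path != "/forecast":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_forecast(self.rfile.read(length))
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(port=8000):
    """Create (but do not start) the server; call .serve_forever() to run it."""
    return HTTPServer(("localhost", port), ModelHandler)
```

Running `make_server(8000).serve_forever()` starts the service locally. A POST to `http://localhost:8000/forecast` with the body `{"history": [10, 20, 30, 40]}` should then return `{"forecast": 30.0}`. Keeping the request handling in a plain function (`handle_forecast`) means the same logic could be reused behind a Flask route.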

Development Tools

Postman is a useful tool to have while working with APIs. It acts as an API client, letting you easily send REST API requests. You will use this tool to test the API.

Docker Desktop is a tool for building and running containerized applications. You will use this tool to deploy your API by building a Docker image.

Deployment

When you create an API with a library like Flask or Plumber, it runs on your local machine and can be accessed at an address such as http://localhost:8000/.

You can access this URL in your browser or in Postman, AIMMS or any other application acting as an API client.
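Every API client follows the same pattern: send a request body to the URL and read back the reply. As an illustration, here is a minimal Python client using only the standard library. It assumes a service that accepts and returns JSON. The `/forecast` endpoint and the `history` field in the usage note are hypothetical placeholders, so substitute whatever your own API defines.

```python
import json
import urllib.request


def build_request(base_url, endpoint, payload):
    """Build a JSON POST request for base_url + endpoint without sending it."""
    return urllib.request.Request(
        base_url.rstrip("/") + endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_model_api(base_url, endpoint, payload, timeout=10):
    """Send the request and return the decoded JSON reply."""
    request = build_request(base_url, endpoint, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a service running locally, a call such as `call_model_api("http://localhost:8000", "/forecast", {"history": [10, 20, 30]})` returns the decoded JSON reply. Because the base URL is a parameter, the same client works unchanged once the API is reachable at a non-local address.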

If you have an AIMMS PRO On-Premise running on the same machine, the API can still be accessed using the same URLs. However, it is very uncommon for a model developer to use the machine that hosts AIMMS PRO On-Premise for development. Moreover, a local URL is not a viable option if your AIMMS app is published on AIMMS Cloud. For a published app to reach the API, you will need to deploy the API as well, so that it is accessible through a global URL instead of just a local one.

One way to deploy the API is to containerize it using Docker. A benefit of using Docker is that once you have a Docker image, you can use it to run or deploy the API in multiple ways:

Run locally using Docker Desktop

Deploy AIMMS PRO On-Premise with Kubernetes

Deploy on Microsoft Azure as an App Service / Web App or other cloud offerings like AWS ECS and Google Cloud.

The image also contains all the dependencies of your Python/R model, so any computer with Docker Desktop installed can run it. We decided to explore the usage of Docker in our examples to take advantage of this reproducibility and flexibility.

Examples

Using scikit-learn’s KMeans clustering in an AIMMS app to find centers of gravity in a demand network: How to Connect AIMMS with Python.

Create a Sankey Diagram using R to visualize network flows in an AIMMS app: How to Connect AIMMS with R.